Information

- LReLU (Leaky Rectified Linear Unit) is a type of activation function used in deep learning models, particularly in convolutional neural networks (CNNs).

- It is similar to the ReLU (Rectified Linear Unit) activation function, but it allows for a small, non-zero gradient when the input is negative.

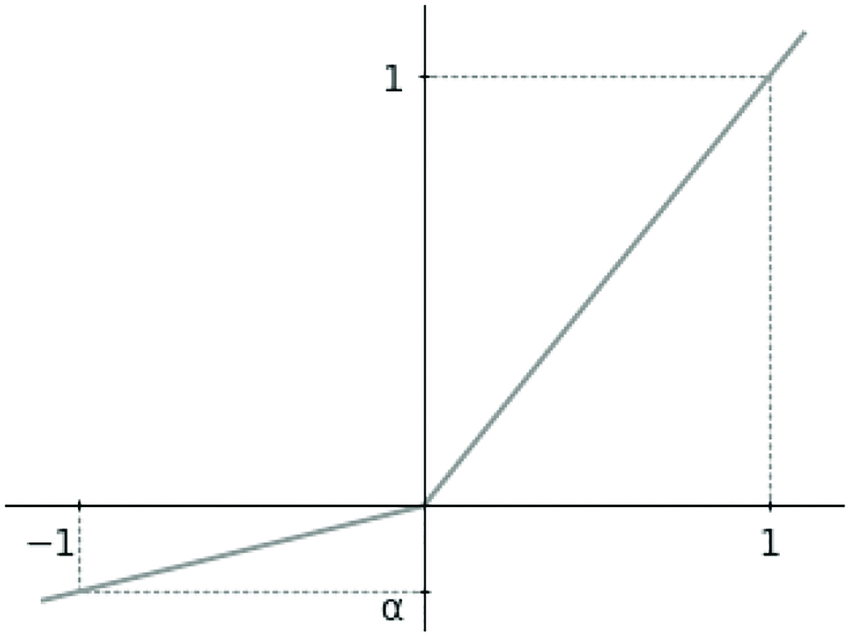

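- The LReLU function is defined as f(x) = max(ax, x), where a is a small positive constant (0 < a < 1) that is usually set to a value between 0.01 and 0.3. Equivalently, f(x) = x when x ≥ 0 and f(x) = ax when x < 0, so a is the slope of the negative part.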

- By allowing a non-zero gradient when the input is negative, LReLU can help prevent the "dying ReLU" problem, in which a ReLU unit outputs zero for all of its inputs, its gradient becomes zero, and the corresponding neuron stops learning.

- LReLU has been reported to perform better than ReLU on some image classification tasks, especially when the dataset is small.

- However, LReLU adds a hyperparameter, the slope a, that has to be chosen. Like ReLU, it is a non-linear activation, so the network's loss function remains non-convex and can still be hard to optimize.
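
The definition and the gradient behaviour described above can be sketched in a few lines of NumPy. This is only a minimal illustration, not a reference implementation: the function names `leaky_relu`, `leaky_relu_grad`, and `relu_grad` are hypothetical, the slope `a=0.1` is an arbitrary value picked from the 0.01 to 0.3 range, and treating the gradient at x = 0 as `a` is a convention assumed here.

```python
import numpy as np

def leaky_relu(x, a=0.01):
    """LReLU as f(x) = max(a*x, x); this form assumes 0 < a < 1."""
    x = np.asarray(x, dtype=float)
    return np.maximum(a * x, x)

def leaky_relu_grad(x, a=0.01):
    """Gradient of LReLU: 1 for x > 0, a otherwise.
    The value at exactly x = 0 is a convention (assumed to be a here)."""
    x = np.asarray(x, dtype=float)
    return np.where(x > 0, 1.0, a)

def relu_grad(x):
    """Gradient of plain ReLU, for comparison: 1 for x > 0, 0 otherwise."""
    x = np.asarray(x, dtype=float)
    return np.where(x > 0, 1.0, 0.0)

x = np.array([-2.0, -0.5, 0.0, 1.5])
print("LReLU output:  ", leaky_relu(x, a=0.1))
print("LReLU gradient:", leaky_relu_grad(x, a=0.1))
print("ReLU gradient: ", relu_grad(x))
```

For the negative inputs, the ReLU gradient is zero while the LReLU gradient equals `a`, which is why a unit with LReLU can keep receiving weight updates even when its input stays negative.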
