Information

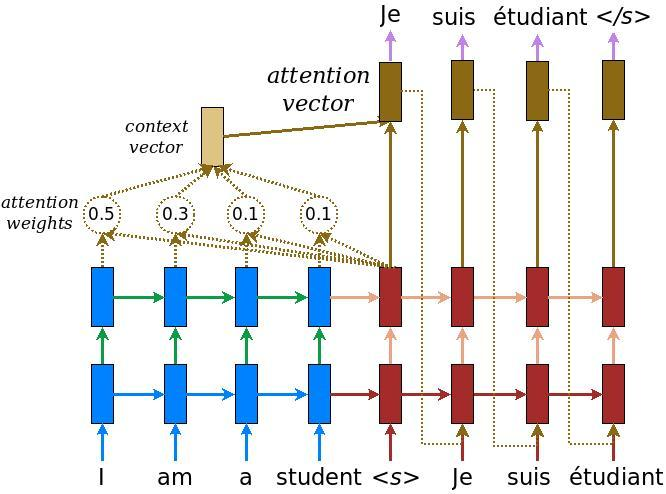

- Attention mechanisms are widely used in machine learning and natural language processing tasks to help models understand the relative importance of different parts of the input.

- The attention mechanism works by computing the similarity between a "query" vector and a "key" vector to determine how important that key is for the given query.

- In an attention mechanism, the query vector represents the current state of the model or the current input, while the key vectors represent the stored representations of the input elements being attended over.

- The dot product of the query with each key vector is computed, and the resulting scores are passed through a softmax function to obtain a probability distribution over the keys.

- The probability distribution over the keys is then used to compute a weighted sum of the corresponding value vectors to obtain the output of the attention mechanism.

- The attention mechanism allows the model to focus on the most relevant parts of the input, which makes it useful for tasks such as machine translation, where the model must attend to different parts of the source sentence as it generates each output word.
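The steps above (dot-product scores, softmax, weighted sum of values) can be sketched in plain Python as below. This is a minimal illustration assuming one query and small lists of key and value vectors; the function names `softmax`, `dot` and `attention` are hypothetical, chosen for this example. It follows the unscaled dot product described above. Transformer-style "scaled dot-product attention" additionally divides each score by the square root of the key dimension before the softmax.

```python
import math

def softmax(scores):
    # Subtract the max score for numerical stability before exponentiating
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    # Dot product of two equal-length vectors
    return sum(x * y for x, y in zip(a, b))

def attention(query, keys, values):
    # 1. Similarity between the query and each key (dot product)
    scores = [dot(query, k) for k in keys]
    # 2. Softmax turns the scores into a probability distribution over the keys
    weights = softmax(scores)
    # 3. Output is the weighted sum of the value vectors
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return output, weights

# Usage: the query matches the first key, so the first value dominates the output
out, w = attention([1.0, 0.0],
                   [[1.0, 0.0], [0.0, 1.0]],
                   [[10.0, 0.0], [0.0, 10.0]])
print([round(x, 3) for x in w])    # [0.731, 0.269]
print([round(x, 3) for x in out])  # [7.311, 2.689]
```

Because the softmax weights always sum to 1, the output is a convex combination of the value vectors, pulled toward the values whose keys are most similar to the query.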
